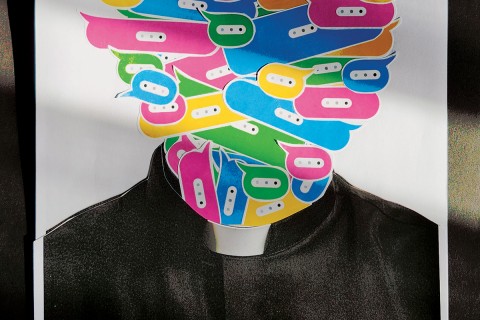

Devil in the algorithm

Social media platforms are damaging democracy, and it’s not primarily about what speech they do or don’t moderate.

Elon Musk’s acquisition of Twitter has left people anxious about the social media platform’s future. Will it become a cesspool of disinformation and hate? Will Donald Trump come back? Will Musk’s emphasis on uninhibited speech create an environment that inhibits meaningful conversation?

These are important questions. But when it comes to social media’s impact on civic life, the core issue is not free speech versus moderation. “Free speech is fundamentally about neutrality with regard to content,” writes journalist Matthew Yglesias in his newsletter, and “Twitter is not a neutral platform.” The problem is the algorithm that determines which public posts users see on Twitter (or in their Facebook news feed) in the first place. An engagement algorithm is biased toward whatever motivates people to do ever more posting, replying, liking, and sharing. And it’s become clear that such algorithms have done a great deal to erode the reliability of public information and the norms of civil discourse.

The algorithms aren’t designed to promote high-quality conversation, of course. They’re designed to maximize profits for the powerful people who control them. The platforms tend to insist that their algorithms also serve users well, though they don’t offer much transparency as to how.